“We don’t understand ourselves. That’s why we want the computer to do it for us”

Grzegorz J. Nalepa is a professor at the AGH University in Krakow, Poland, who recently gave a series of lectures at the unibz Faculty of Computer Science. One of them was “Affective Computing, Context and Processing”. We interviewed him about the ethical questions raised by this new technological development.

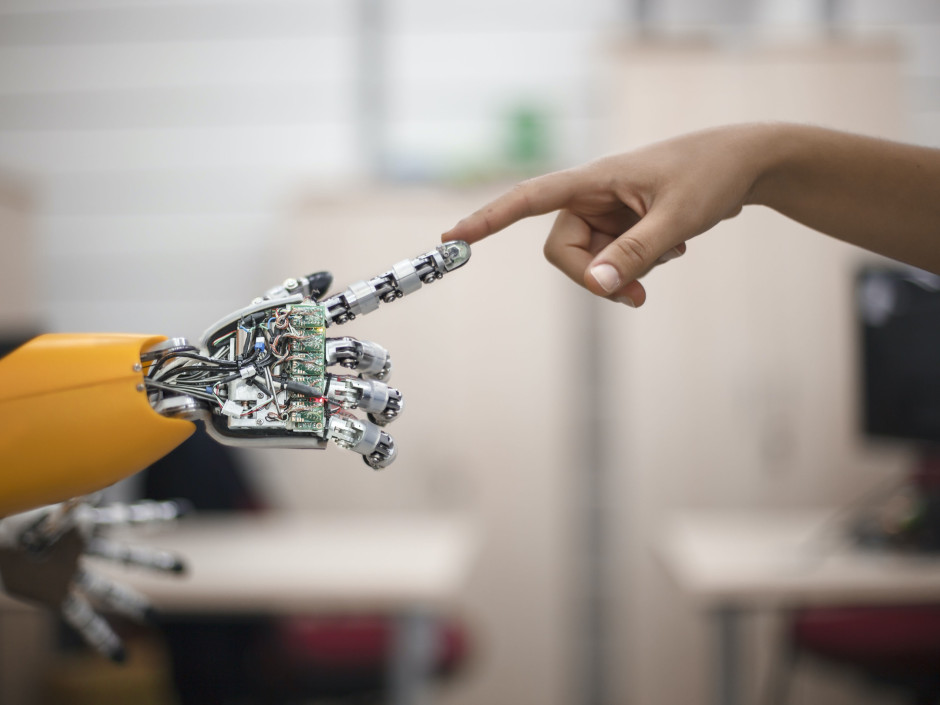

Computers and emotions. Two worlds that, outside the occasional science fiction film or novel, most of us consider completely separate. This may have been true until a few years ago, but computers have now begun to interact with humans, reading and reacting to their feelings and desires. Within computer science, a whole research field called “Affective Computing” is now firmly established; it explores this topic and draws heavily on psychology and neuroscience.

You are a computer scientist but, at the same time, you have a master’s degree in Philosophy. What were the reasons for such an unusual choice?

Let’s say that I took up Philosophy for intellectual pleasure, not for research reasons. When I completed my master’s degree in Computer Science and Artificial Intelligence, I felt that I somehow needed to devote myself to something more profound, so I studied Philosophy while working on my PhD in Computer Science.

During your stay at unibz, one of your scheduled lectures was on Affective Computing. What is it?

It’s a very broad topic, and not an entirely new one. In fact, it was Rosalind Picard, the founder and director of the Affective Computing Research Group at the Massachusetts Institute of Technology, who first proposed it almost twenty years ago. I think the simplest definition is that of a domain where, on the one hand, computers are able to simulate and express emotions and, on the other hand, they can recognize and interpret human feelings. These are the two main aspects research focuses on today. I work mostly on the second one.

Why would anyone want computers to detect, interpret or simulate emotions? What is the use of it?

From a business perspective, there is a huge, open market for such applications. Many companies are interested in developing technologies that recognize feelings, and some are already doing it. Think of the “Like” button on Facebook, for example, which has expanded from the original thumbs-up to a range of other emotions.

What kind of applications are you referring to?

There are many different ones. For instance, in Computer Science we speak of “Sentiment Analysis”. It’s basically the analysis of a written text, whether on Facebook, on Amazon, on a website or anywhere else. Fundamentally, you have a text, a computer and an algorithm: you run the algorithm on the text, and it should tell you whether the text is emotionally positive or negative. More advanced methods could describe a whole range of different feelings. Recently there has been a lot of work on the automatic detection of hate speech, for example. Neuromarketing is very interested in knowing a consumer’s emotional state in order to use this information for various marketing purposes. We could receive different kinds of advertising based on our feelings: depending on whether we are sad or happy, we could get a message suited to our mood. In general, marketing stands to benefit from research in this area.
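The text, computer and algorithm pipeline described in this answer can be illustrated with a deliberately minimal sketch of lexicon-based sentiment analysis in Python. It assumes a tiny, hypothetical word list (`LEXICON`), a hypothetical set of negation words (`NEGATORS`) and a hypothetical `polarity` function; it is not the method used by Professor Nalepa or by any platform mentioned in the interview. Real systems rely on far larger lexicons or on trained machine-learning models.

```python
import re

# Hypothetical toy lexicon for illustration only: +1 for positive words,
# -1 for negative words. Real lexicons contain thousands of scored entries.
LEXICON = {
    "love": 1, "great": 1, "happy": 1, "excellent": 1,
    "hate": -1, "awful": -1, "sad": -1, "terrible": -1,
}

# Hypothetical negation words: they flip the score of the word right after them.
NEGATORS = {"not", "never", "no"}


def polarity(text: str) -> str:
    """Label text 'positive', 'negative' or 'neutral' by summing word scores."""
    # Lowercase the text and split it into simple word tokens.
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)  # unknown words contribute nothing
        # Simple negation handling: "not great" counts as negative.
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    reviews = [
        "I love this phone, the camera is excellent!",
        "Terrible battery, I hate it.",
        "The food was not great.",
    ]
    for review in reviews:
        print(f"{polarity(review):8} | {review}")
```

Summing word scores keeps the logic transparent, but it is brittle: sarcasm, context and words missing from the list all defeat it. That is one reason why the more advanced methods that distinguish a full range of feelings generally rely on trained models instead.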

What about the other dimension of affective computing?

There could be many applications in care systems, mainly aimed at senior citizens. These need not be robots; it could simply be an affective communication system between a machine and its user. In this case we want the experience to be as friendly as possible and the system to be sympathetic or compassionate. If the system can detect how we feel, maybe it could adopt a better tone and cheer us up!

With such deep interference in our emotional lives, aren’t we running the risk of being constantly manipulated by the market?

In general, I agree that we are observed and monitored too much. Some technologies may be invented for evil purposes, but I’d say that most technologies are neutral. We can use affective computing in a positive way but, of course, the same information could also be used to mislead people.

What kind of ethical problems does this pose for a computer scientist?

We are, of course, aware that this whole domain could have a significant impact on our societies, but scientists are rarely the ones who create the final products for the market. It’s a long chain that involves people from business and politics and, finally, every one of us. The challenge is for society to understand how the technology works. But this is a general question that goes beyond Computer Science alone; it concerns society as a whole. The same applies to the cameras in our cities, to the monitoring of credit cards and smartphones, and to other aspects of our everyday life and technology. Recently I’ve been reading and hearing that people are increasingly worried about the development of Artificial Intelligence (AI). But I don’t think we need to be frightened. Fear is always a powerful tool, commercially and politically. We need to understand the potential of AI and learn to cope with it; the same applies to Affective Computing.

Is there a debate on this topic within the computer science community?

There are certainly important debates on the possible ethical consequences of AI in general, rather than on affective computing specifically. But I don’t think such debates should be held only among us computer scientists. In some countries there are foundations where people from different fields and sections of society meet to discuss ethical questions raised by science and technology. Computer scientists are mainly focused on achieving technical results, so this is an issue we should try to approach together. Take the example of a self-driving car: who is responsible for its choices when it’s driving? We need to find those answers together. I think people from the humanities, sociologists or philosophers for example, bear a great responsibility, because they have the conceptual tools that people from the technological sectors often lack. Those who study human nature should also help create the right environment for this discussion.

Do you think our society is ready to cope with a challenge such as robots replacing human beings?

These technologies are likely to be developed anyway, and it is certainly better for society to discuss these questions now than to be surprised when they finally become part of everyday life. Basically, I think we are developing general artificial intelligence because we don’t understand our own intelligence, or its relation to consciousness, so we want conscious and intelligent computers to solve this problem for us.

(Revision: Jemma Prior)
