They’re secretly experimenting on us!

Michael Ennis, Didactic and Scientific Coordinator for the English Language at the Free University of Bozen-Bolzano (unibz), talks about language assessment at his university.

It seems that many new students and new teaching staff find unibz’s trilingual language model confusing. Do you think this is the case?

Well, the entrance and exit language requirements are clear enough to most members of the University community. But the many different types of language courses and tests that students encounter at unibz, and the role each plays in learning languages and meeting graduation requirements, are less intuitive.

For instance, students frequently ask questions such as: “I passed the C1 exam at the Language Centre; why can’t I pass my B2 course in the Faculty of Economics and Management?” Or: “I passed a C1 end-of-course test at the Language Centre; why do I still have to certify B2 by taking another exam?”

So you think the confusion is because unibz has too many types of language courses and exams?

Not at all. Contrary to popular belief, unibz is not the setting of a Franz Kafka novella about the absurdity of learning languages in the age of the Common European Framework of Reference for Languages (CEFR), where the only reward for passing a “language test” today is permission to take a much more difficult “language exam” tomorrow, in the perpetual pursuit of the elusive “C2”. In fact, each language course and each language test serves a distinct purpose within the University’s language model, and the model as a whole has students’ best interests in mind. The confusion has two sources. First, in language assessment there is no universal distinction between the words “exam” and “test”, other than that “exam” sounds more “official” or “bigger”; here at unibz, however, we use the two words to refer to different experiences. Second, there are many different types of language “tests” or “exams”. Most students will encounter at least four types during their academic careers at unibz: placement tests, diagnostic tests, achievement tests, and proficiency exams.

Do you think that part of the problem is that language teachers and students experience tests from different perspectives?

Absolutely. From the perspective of students, the purpose of any language test is simply to “pass the test”. But from the perspective of language teachers and language examiners, a language test is essentially a field experiment. The goal of the experiment is to elicit a sample of a student’s language knowledge and/or language skills in order to answer a specific research question. The research question the examiner is asking determines both the purpose and the design of the test.

Could you elaborate?

Sure. If I wanted to know the average height of Italian women from South Tyrol, I would probably not have the time or resources to measure every single woman living in South Tyrol. I would have to take a “representative sample”, for example 30 randomly selected women. I would also need instruments and units of measurement, procedures for data collection, and methods for data analysis. For instance, I could measure the height of each “participant” with a metric tape measure and then record the data in Microsoft Excel to perform statistical analyses. In the case of a language test, the object of study is the language knowledge and skills of students. The teacher cannot test students on every aspect of the language, so he or she has to choose a representative sample of the language to include on the test. The instrument for data collection is the test itself. The procedures are the way in which the test is administered. The units of measurement are the assessment criteria and grading rubrics. And the method of data analysis is, likewise, to enter the scores into a spreadsheet and perform statistical analyses to ensure that the results are reliable and valid. What varies from one type of language test to the next is simply the research question.

So, what is the research question in a placement test?

In which language course should this student enroll? The purpose is simply to “place” a student in a particular course based on a standard measure of the student’s existing language competence, usually a score on a fixed scale. For the sake of efficiency, a good placement test takes no more than 20 or 30 minutes to complete and will therefore typically test only a small sample of the grammar and vocabulary taught across all the courses in a language curriculum. The goal is not to discover how much a student has learned to date, or how much a student knows per se, but to place the student with peers who have very similar scores and, presumably, similar competence in the language. This is why you cannot “fail” a placement test.

What is the research question in a diagnostic test?

What are this student’s strengths and weaknesses? A diagnostic test typically takes place at the beginning of a course (i.e., after students have been placed) and is usually organized by the course teacher. Its purpose is to help the teacher determine which language and language skills to teach this particular group of students enrolled in this particular course, because students’ learning needs differ. Like a placement test, a diagnostic test cannot be passed or failed. Unlike a placement test, however, a diagnostic test samples only the content the teacher deems appropriate for the course. If the goal of the course is also to develop speaking and writing skills, for example, the teacher might also test students’ knowledge of, or ability to produce, particular genres of speech and writing. In this case, a diagnostic test can take an hour or more. After analyzing the results, the teacher will tend to focus instruction on the students’ most common weaknesses.

What about an achievement test?

The question is: To what extent has the student met the learning objectives of this course? At unibz there are actually two types of language achievement tests: the “final exams” administered at the end of degree courses, which award academic credit, and the “end-of-course tests” administered in the optional language courses organized by the Language Centre. Unlike a placement or diagnostic test, a good achievement test (or course test) does not test students’ current knowledge and skills. It does not even really test how much students have learned since the beginning of the course, because, especially with languages, most learning occurs informally outside the course. A good course test assesses only how much students have learned of the content that was explicitly taught during the course. Obviously, it is impossible to test students on everything taught during an entire course, so the instructor will again test only a sample. But the instructor wants to ensure that the sample is representative of the course’s learning objectives. This is why a course test tends to cover more content than a placement or diagnostic test and can take two hours or more.

And a proficiency test?

There are two possible research questions guiding a proficiency test. It could be: What is this learner’s current proficiency level? Or it could be: Has this learner achieved this particular level? A proficiency test (what we call a “language proficiency exam” at unibz) does not sample the language taught during a course, or even across a curriculum. It samples from a much larger “population”. Some proficiency exams, such as the Cambridge exams for English, the CELI exams for Italian, the TestDaF for German, or the exams offered by unibz, sample from all the language a learner should know and be able to use at a particular level on a particular proficiency scale (in our case, the CEFR). For example, the CAE exam tests whether or not a learner is at the C1 level. For this type of proficiency exam, you receive either a “passing” or a “failing” score. Other exams, such as IELTS for English, sample from the language as a whole in order to pinpoint your current level. For this type of exam there is no “passing” or “failing” score, because every possible score is associated with a specific level on a proficiency scale. Since the “population” of all the words and grammatical utterances possible in a language is immense, the sample must be rather large, which is why most proficiency exams can take as long as four hours to complete.

And are these different types of tests ever interchangeable?

Sometimes. Because of their very small sample sizes, placement and diagnostic tests can be used only for their stated purposes. But course tests and proficiency exams can have other, limited applications. At the Language Centre, both are also used for placement within our curriculum, and they might also be used for diagnosis if the teacher has access to a student’s correct and incorrect responses. However, a proficiency exam can never replace a course test, and a course test can never replace a proficiency exam. Using a proficiency exam in place of a course test would be like taking a random sample of 400 women from the entire world in order to estimate the average height of women in South Tyrol! Using a course test in place of a proficiency exam would be like taking a random sample of 30 women from South Tyrol in order to estimate the average height of women in the entire world!

Related Articles

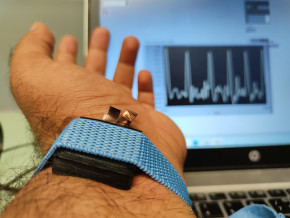

Tecno-prodotti. Creati nuovi sensori triboelettrici nel laboratorio di sensoristica al NOI Techpark

I wearable sono dispositivi ormai imprescindibili nel settore sanitario e sportivo: un mercato in crescita a livello globale che ha bisogno di fonti di energia alternative e sensori affidabili, economici e sostenibili. Il laboratorio Sensing Technologies Lab della Libera Università di Bolzano (unibz) al Parco Tecnologico NOI Techpark ha realizzato un prototipo di dispositivo indossabile autoalimentato che soddisfa tutti questi requisiti. Un progetto nato grazie alla collaborazione con il Center for Sensing Solutions di Eurac Research e l’Advanced Technology Institute dell’Università del Surrey.

unibz forscht an technologischen Lösungen zur Erhaltung des Permafrostes in den Dolomiten

Wie kann brüchig gewordener Boden in den Dolomiten gekühlt und damit gesichert werden? Am Samstag, den 9. September fand in Cortina d'Ampezzo an der Bergstation der Sesselbahn Pian Ra Valles Bus Tofana die Präsentation des Projekts „Rescue Permafrost " statt. Ein Projekt, das in Zusammenarbeit mit Fachleuten für nachhaltiges Design, darunter einem Forschungsteam für Umweltphysik der unibz, entwickelt wurde. Das gemeinsame Ziel: das gefährliche Auftauen des Permafrosts zu verhindern, ein Phänomen, das aufgrund des globalen Klimawandels immer öfter auftritt. Die Freie Universität Bozen hat nun im Rahmen des Forschungsprojekts eine erste dynamische Analyse der Auswirkungen einer technologischen Lösung zur Kühlung der Bodentemperatur durchgeführt.

Gesunde Böden dank Partizipation der Bevölkerung: unibz koordiniert Citizen-Science-Projekt ECHO

Die Citizen-Science-Initiative „ECHO - Engaging Citizens in soil science: the road to Healthier Soils" zielt darauf ab, das Wissen und das Bewusstsein der EU-Bürger:innen für die Bodengesundheit über deren aktive Einbeziehung in das Projekt zu verbessern. Mit 16 Teilnehmern aus ganz Europa - 10 führenden Universitäten und Forschungszentren, 4 KMU und 2 Stiftungen - wird ECHO 16.500 Standorte in verschiedenen klimatischen und biogeografischen Regionen bewerten, um seine ehrgeizigen Ziele zu erreichen.

Erstversorgung: Drohnen machen den Unterschied

Die Ergebnisse einer Studie von Eurac Research und der Bergrettung Südtirol liegen vor.